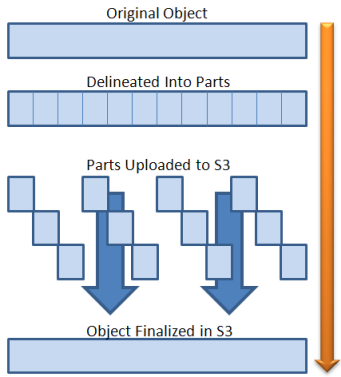

When You Have to Go for Multipart Upload

When uploading a large file to an AWS S3 bucket, the AWS SDK/CLI automatically splits the upload into multiple HTTP PUT requests (the AWS CLI does this by default for files above 8 MB, and S3 requires every part except the last to be at least 5 MB). This is more efficient, allows for resumable uploads, and, if one of the parts fails to upload, only that part is re-uploaded without stopping the overall upload.
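To make the splitting concrete, here is a minimal Python sketch of how a file could be divided into parts. It assumes an 8 MiB part size (the AWS CLI's default `multipart_chunksize`) and encodes two S3 limits: at most 10,000 parts per upload, and every part except the last must be at least 5 MiB. The helper `plan_parts` is hypothetical; the SDKs do this planning internally.

```python
from __future__ import annotations

MIB = 1024 * 1024
MIN_PART_SIZE = 5 * MIB  # S3 minimum for every part except the last
MAX_PARTS = 10_000       # S3 maximum number of parts per upload


def plan_parts(file_size: int, part_size: int = 8 * MIB) -> list[tuple[int, int, int]]:
    """Return (part_number, offset, length) tuples covering file_size bytes.

    Hypothetical helper for illustration; part numbers start at 1, as in S3.
    """
    if part_size < MIN_PART_SIZE:
        raise ValueError("part_size must be at least 5 MiB")
    parts = []
    offset = 0
    part_number = 1
    while offset < file_size:
        # The last part may be shorter than part_size.
        length = min(part_size, file_size - offset)
        parts.append((part_number, offset, length))
        offset += length
        part_number += 1
    if len(parts) > MAX_PARTS:
        raise ValueError("too many parts; choose a larger part_size")
    return parts
```

For a 20 MiB file this yields three parts: two of 8 MiB and a final 4 MiB part. Each part is an independent request, which is why a failed part can be retried on its own, and also why finished parts can be left behind when the whole upload is abandoned.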

Nonetheless, there lies a potential pitfall with multipart uploads:

If your upload is interrupted before the object is fully uploaded, you're charged for the uploaded object parts until you delete them.

As a result, you may experience a hidden increase in storage costs that isn't apparent right away.

Read on to see how to identify uploaded object parts, and how to reduce costs when there are unfinished multipart uploads.

How can I find uploaded object parts in the AWS S3 Console?

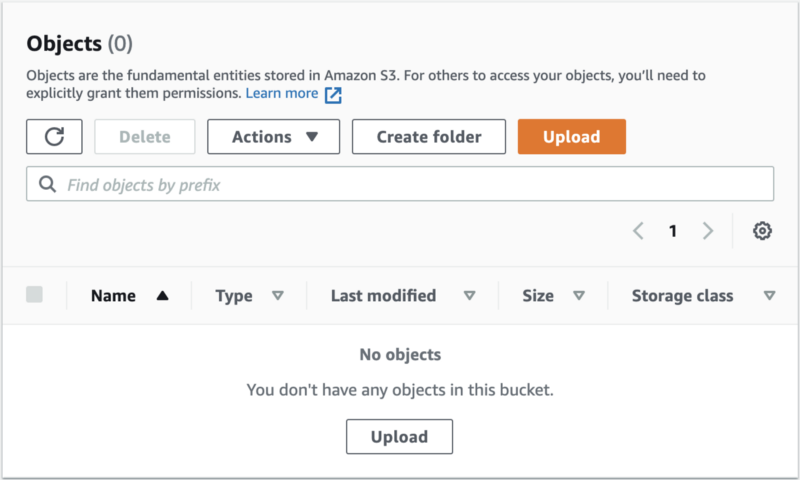

This is the interesting part: you cannot see these objects in the AWS S3 Console.

For the purpose of this article, I created an S3 bucket and started uploading a 100 GB file. I stopped the upload process after 40 GB had been uploaded.

When I accessed the S3 Console, I could see that there were 0 objects in the bucket and that the console was not displaying the 40 GB that had been uploaded (multipart).

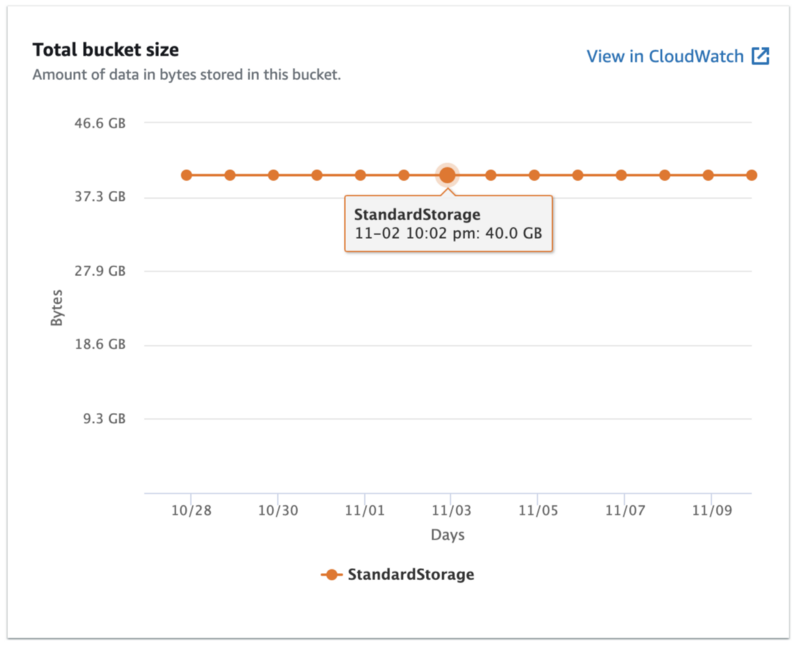

Then I clicked on the Metrics tab, and I saw that the bucket size was 40 GB.

It may take several hours for updated metrics to appear.

This means that even though you can't see the object in the console because the upload didn't finish, you are still being charged for the parts that were already uploaded.
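The bucket size shown in the Metrics tab corresponds to the daily CloudWatch storage metric `BucketSizeBytes` (namespace `AWS/S3`, dimensions `BucketName` and `StorageType`). A minimal boto3 sketch for reading it from a script, assuming the data is in S3 Standard (`StandardStorage`) and that the CloudWatch client is created in the bucket's region. The function name is hypothetical, and the client is passed in so a stub can stand in for `boto3.client("cloudwatch")`.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone


def latest_bucket_size_bytes(cloudwatch, bucket: str, storage_type: str = "StandardStorage"):
    """Return the most recent daily BucketSizeBytes average, or None if there is no data yet."""
    now = datetime.now(timezone.utc)
    resp = cloudwatch.get_metric_statistics(
        Namespace="AWS/S3",
        MetricName="BucketSizeBytes",
        Dimensions=[
            {"Name": "BucketName", "Value": bucket},
            {"Name": "StorageType", "Value": storage_type},
        ],
        StartTime=now - timedelta(days=3),  # the metric is reported about once per day
        EndTime=now,
        Period=86400,
        Statistics=["Average"],
    )
    points = resp.get("Datapoints", [])
    if not points:
        return None  # metrics can take hours to appear
    latest = max(points, key=lambda p: p["Timestamp"])
    return latest["Average"]
```

Comparing this number with the total size of the objects you can actually list is a quick way to spot a gap caused by unfinished multipart uploads.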

How is this addressed in the real world?

I've approached several colleagues at various companies that run AWS accounts with substantial monthly AWS S3 usage.

Most of these colleagues had anywhere from over 100 MB up to over 10 TB of unfinished multipart uploads. The general consensus was that the larger the S3 usage and the older the account, the more incomplete objects existed.
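To put that range into rough numbers, here is a back-of-the-envelope sketch. It assumes an S3 Standard price of USD 0.023 per GB-month (roughly the us-east-1 list price for the first pricing tier); prices vary by region, storage class, and over time, so treat the constant as an assumption and check current pricing.

```python
# Assumption: S3 Standard price per GB-month; verify against current pricing for your region.
ASSUMED_PRICE_PER_GB_MONTH = 0.023


def monthly_storage_cost(stored_gb: float, price_per_gb_month: float = ASSUMED_PRICE_PER_GB_MONTH) -> float:
    """Rough monthly storage charge for orphaned multipart parts."""
    return stored_gb * price_per_gb_month


for label, gb in [("100 MB", 0.1), ("40 GB (the test above)", 40), ("10 TB", 10 * 1024)]:
    print(f"{label}: ${monthly_storage_cost(gb):.2f} per month")
```

Under that assumption the 40 GB test upload costs about $0.92 per month, and 10 TB of forgotten parts about $235.52 per month, every month until the parts are deleted.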

Calculating the multipart part size of a single object

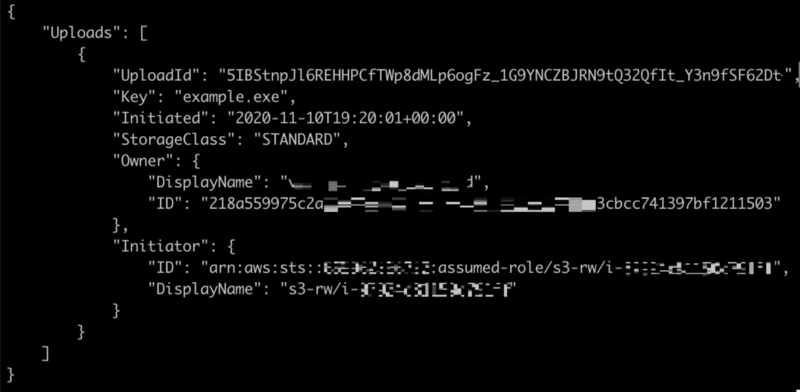

First, in the AWS CLI, list the current multipart uploads with the following command:

aws s3api list-multipart-uploads --bucket <bucket-name>

This outputs a list of all the objects that are incomplete and have multiple parts:

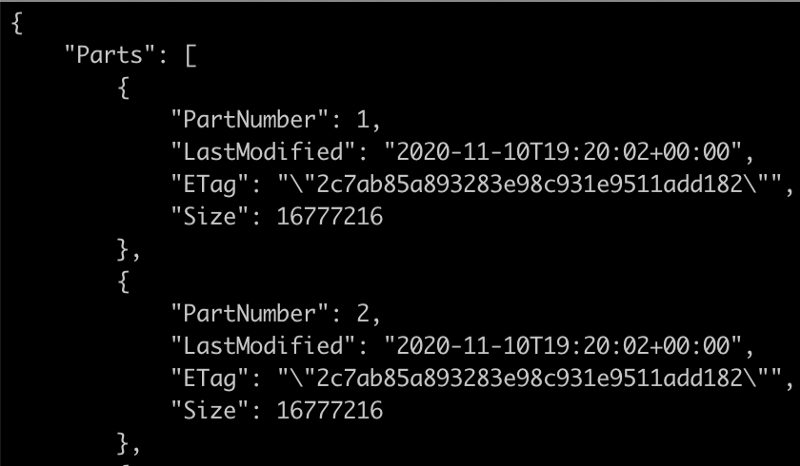
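If you'd rather script this step, a small Python sketch can parse the command's JSON output. It relies only on the `Uploads` list and its `Key`, `UploadId`, and `Initiated` fields; the helper names are hypothetical. Timestamps ending in `Z` are normalized so that `datetime.fromisoformat` accepts them.

```python
from __future__ import annotations

import json
from datetime import datetime, timezone


def parse_initiated(value: str) -> datetime:
    """Parse an ISO 8601 timestamp from the CLI output; a trailing 'Z' means UTC."""
    if value.endswith("Z"):
        value = value[:-1] + "+00:00"
    return datetime.fromisoformat(value)


def summarize_uploads(cli_output: str, now: datetime | None = None) -> list[dict]:
    """Return key, upload ID, and age in days for each in-progress multipart upload."""
    if not cli_output.strip():
        return []  # the CLI may print nothing when there are no uploads
    now = now or datetime.now(timezone.utc)
    result = []
    for upload in json.loads(cli_output).get("Uploads", []):
        initiated = parse_initiated(upload["Initiated"])
        result.append({
            "key": upload["Key"],
            "upload_id": upload["UploadId"],
            "age_days": (now - initiated).days,
        })
    return result
```

For example, redirect the command's output to a file (`aws s3api list-multipart-uploads --bucket <bucket-name> > uploads.json`) and pass the file's contents to `summarize_uploads`; the `age_days` field makes it easy to see which uploads are clearly abandoned.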

Then, list all the parts in the multipart upload by using the list-parts command with the "UploadId" value:

aws s3api list-parts --upload-id 5IBStnpJl6REH... --bucket <bucket-name> --key example.exe

Next, sum the size (in bytes) of all the uploaded parts and convert the total to GB by piping the output into jq (a command-line JSON processor). Dividing by 1024 three times gives the result in GiB:

aws s3api list-parts --upload-id 5IBStnpJl6REH... --bucket <bucket-name> --key example.exe | jq '.Parts | map(.Size/1024/1024/1024) | add'
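The same arithmetic in Python, as a sketch for environments without jq: it reads the JSON printed by `aws s3api list-parts` and divides the summed `Size` values by 1024³, so, like the jq filter, the result is in GiB. The function name is hypothetical.

```python
import json

BYTES_PER_GIB = 1024 ** 3


def uploaded_gib(list_parts_output: str) -> float:
    """Sum the Size (in bytes) of every part in `aws s3api list-parts` output, in GiB."""
    parts = json.loads(list_parts_output).get("Parts", [])
    return sum(part["Size"] for part in parts) / BYTES_PER_GIB
```

One small difference: jq's `add` returns `null` for an upload with no parts, whereas this function returns `0.0`.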

If you want to delete a multipart upload manually, you can run:

aws s3api abort-multipart-upload --bucket <bucket-name> --key example.exe --upload-id 5IBStnpJl6REH...
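To clean up a whole bucket rather than one upload at a time, the listing and abort calls can be combined. This is a sketch, not a drop-in tool. It assumes a boto3 S3 client (`boto3.client("s3")`) is passed in, paginates `list_multipart_uploads` with `KeyMarker`/`UploadIdMarker`, and aborts only uploads initiated more than `older_than_days` ago. The function name and the `dry_run=True` default are hypothetical choices.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone


def abort_stale_uploads(s3, bucket: str, older_than_days: int = 3,
                        dry_run: bool = True, now: datetime | None = None) -> list[tuple[str, str]]:
    """Find (and unless dry_run, abort) multipart uploads older than the cutoff.

    Returns the (key, upload_id) pairs that are, or would be, aborted.
    """
    cutoff = (now or datetime.now(timezone.utc)) - timedelta(days=older_than_days)
    stale = []
    kwargs = {"Bucket": bucket}
    while True:
        page = s3.list_multipart_uploads(**kwargs)
        for upload in page.get("Uploads", []):
            # boto3 returns Initiated as a timezone-aware datetime.
            if upload["Initiated"] < cutoff:
                stale.append((upload["Key"], upload["UploadId"]))
        if not page.get("IsTruncated"):
            break
        kwargs["KeyMarker"] = page["NextKeyMarker"]
        kwargs["UploadIdMarker"] = page["NextUploadIdMarker"]
    if not dry_run:
        for key, upload_id in stale:
            s3.abort_multipart_upload(Bucket=bucket, Key=key, UploadId=upload_id)
    return stale
```

The sketch collects all stale uploads before aborting any, so the listing is not modified while it is being paginated. Running it once with the default `dry_run=True` shows what would be removed.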

How to stop being charged for unfinished multipart uploads?

At the bucket level, you can create a lifecycle rule that will automatically delete incomplete multipart uploads after a couple of days.

"An S3 Lifecycle configuration is a set of rules that define actions that Amazon S3 applies to a group of objects." (AWS documentation).

Below are two solutions:

- A manual solution for existing buckets, and

- An automatic solution for creating a new bucket.
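Both solutions install the same kind of rule. As a sketch, this is roughly what such a lifecycle configuration looks like when built in Python and printed as JSON; the rule ID is a hypothetical name. Saved to a file, it could be applied with `aws s3api put-bucket-lifecycle-configuration --bucket <bucket-name> --lifecycle-configuration file://lifecycle.json`, but be aware that this call replaces any lifecycle configuration the bucket already has.

```python
import json


def abort_incomplete_mpu_config(days: int = 3, rule_id: str = "abort-incomplete-multipart-uploads") -> dict:
    """Lifecycle configuration that aborts multipart uploads `days` after initiation."""
    if days < 1:
        raise ValueError("days must be a positive integer")
    return {
        "Rules": [
            {
                "ID": rule_id,  # hypothetical rule name
                "Filter": {"Prefix": ""},  # empty prefix: every object in the bucket
                "Status": "Enabled",
                "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": days},
            }
        ]
    }


if __name__ == "__main__":
    print(json.dumps(abort_incomplete_mpu_config(3), indent=2))
```

The rule contains only an `AbortIncompleteMultipartUpload` action and no `Expiration` action, so completed objects are not affected by it.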

Deleting multipart uploads in existing buckets

In this solution, you'll create a lifecycle rule to remove old incomplete multipart uploads in an existing bucket.

Caution: Be careful when defining a lifecycle rule. A mistake in the definition may delete existing objects in your bucket.

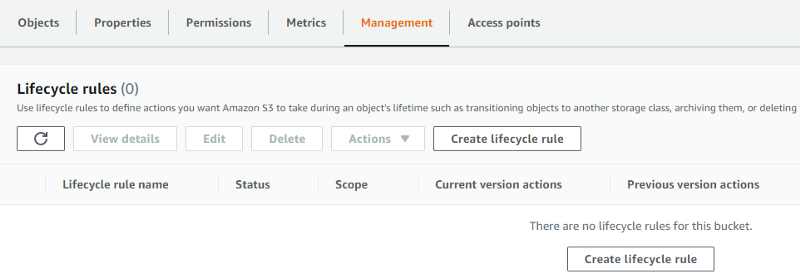

First, open the AWS S3 Console, select the desired bucket, and navigate to the Management tab.

Under Lifecycle rules, click on Create lifecycle rule.

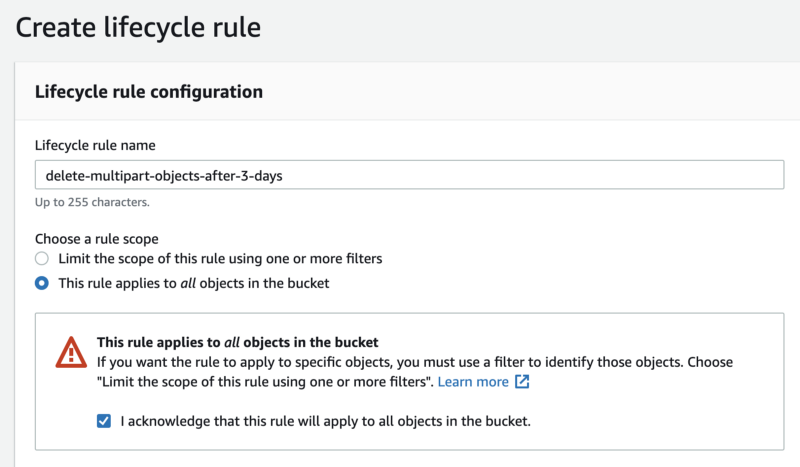

Then name the lifecycle rule and set the rule's scope to apply to all objects in the bucket.

Check the box for "I acknowledge that this rule will apply to all objects in the bucket".

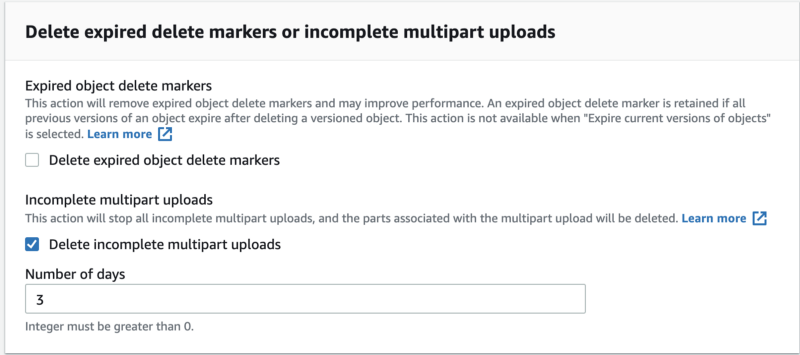

Next, navigate to Lifecycle rule actions and check the box for "Delete expired object delete markers or incomplete multipart uploads".

Check the box for "Delete incomplete multipart uploads", and set the Number of days according to your needs (I believe that three days is enough time to finish incomplete uploads).
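The console steps above can also be scripted. Because `put_bucket_lifecycle_configuration` replaces the bucket's entire lifecycle configuration, this sketch first reads the existing rules and appends the abort rule only if no rule with the same ID exists, in the spirit of the caution above. It assumes a boto3 S3 client is passed in and that the caller has `s3:GetLifecycleConfiguration` and `s3:PutLifecycleConfiguration`; the function name and rule ID are hypothetical.

```python
RULE_ID = "abort-incomplete-multipart-uploads"  # hypothetical rule name


def ensure_abort_rule(s3, bucket: str, days: int = 3) -> bool:
    """Add an abort-incomplete-multipart-upload rule while keeping existing rules.

    Returns True if the configuration was updated, False if the rule already existed.
    """
    try:
        rules = s3.get_bucket_lifecycle_configuration(Bucket=bucket).get("Rules", [])
    except Exception as exc:  # botocore.exceptions.ClientError in practice
        code = getattr(exc, "response", {}).get("Error", {}).get("Code")
        if code != "NoSuchLifecycleConfiguration":
            raise
        rules = []  # the bucket has no lifecycle configuration yet
    if any(rule.get("ID") == RULE_ID for rule in rules):
        return False
    rules.append({
        "ID": RULE_ID,
        "Filter": {"Prefix": ""},  # all objects in the bucket
        "Status": "Enabled",
        "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": days},
    })
    # Write back the existing rules together with the new one.
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration={"Rules": rules}
    )
    return True
```

The duplicate check only looks at the rule ID, so a bucket that already has an equivalent rule under a different name would get a second one; review the existing rules before running this across many buckets.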

After you complete the steps above, incomplete multipart uploads older than the configured number of days will be deleted, but not immediately (lifecycle actions run asynchronously, so it'll take a little while).

Two things to note:

- Delete operations are free of charge.

- Once you have defined the lifecycle rule, you are not charged for the data that will be deleted.

Creating a lifecycle rule for new buckets

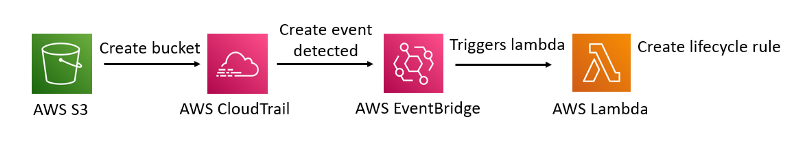

In this solution, you'll create a lifecycle rule that is applied automatically every time a new bucket is created.

This uses a straightforward Lambda automation script, which is triggered every time a new bucket is created. The Lambda function applies a lifecycle rule that deletes incomplete multipart uploads 3 days after they were initiated.
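A minimal sketch of such a handler, assuming the EventBridge rule delivers the CloudTrail `CreateBucket` event unchanged, so the new bucket's name is at `detail.requestParameters.bucketName`. This is an illustration, not the author's gist: the function names and rule ID are hypothetical. It needs only `s3:PutLifecycleConfiguration`, the permission granted by the IAM policy in step 7, and because a newly created bucket has no lifecycle configuration yet, overwriting existing rules is not a concern here.

```python
DAYS_AFTER_INITIATION = 3  # the 3-day window described above
RULE_ID = "abort-incomplete-multipart-uploads"  # hypothetical rule name


def bucket_name_from_event(event: dict) -> str:
    """Extract the bucket name from a CloudTrail CreateBucket event delivered by EventBridge."""
    return event["detail"]["requestParameters"]["bucketName"]


def apply_rule(s3, bucket: str, days: int = DAYS_AFTER_INITIATION) -> dict:
    """Put a lifecycle configuration that aborts incomplete multipart uploads after `days`."""
    config = {
        "Rules": [
            {
                "ID": RULE_ID,
                "Filter": {"Prefix": ""},  # all objects in the bucket
                "Status": "Enabled",
                "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": days},
            }
        ]
    }
    s3.put_bucket_lifecycle_configuration(Bucket=bucket, LifecycleConfiguration=config)
    return config


def lambda_handler(event, context):
    import boto3  # included in the Lambda Python runtime

    bucket = bucket_name_from_event(event)
    apply_rule(boto3.client("s3"), bucket)
    return {"bucket": bucket, "rule": RULE_ID}
```

Lambda only calls `lambda_handler(event, context)`; keeping the event parsing and the S3 call in separate functions makes the logic easy to test with a stub client.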

Note: Since EventBridge rules only run in the region in which they are created, you must deploy the Lambda function in each region you operate in.


How to implement this automation?

1. Enable an AWS CloudTrail trail. Once you configure the trail, you can use Amazon EventBridge to trigger a Lambda function.

2. Create a new Lambda function, with Python 3.8 as the function runtime.

3. Paste the Lambda code from the GitHub gist.

4. Select Create function.

5. Scroll to the top of the page, under Trigger select 'Add trigger', and for the Trigger configuration, choose EventBridge.

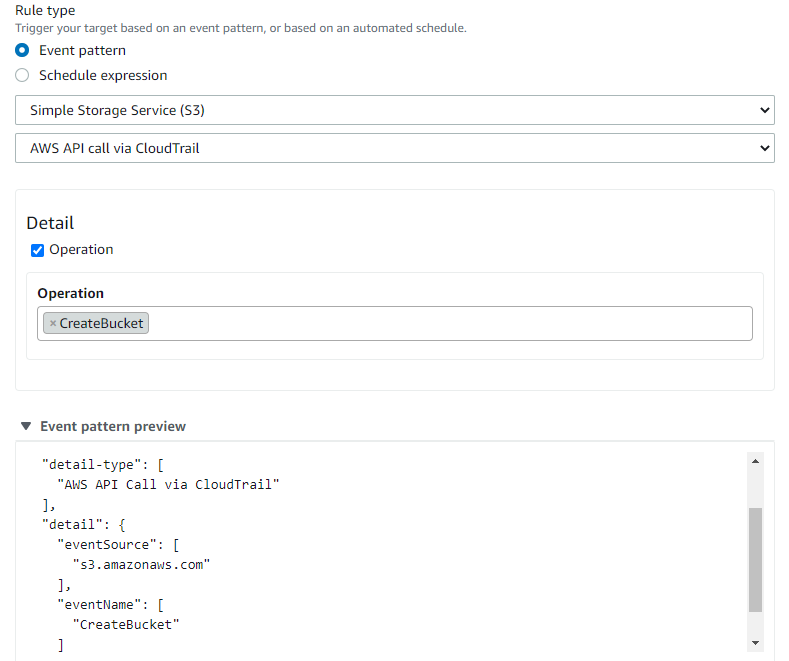

Then create a new rule and give the rule a name and description.

6. Choose Event pattern as the rule type, then choose Simple Storage Service (S3) and AWS API Call via CloudTrail in the two boxes below.

Under the Detail box, choose CreateBucket as the Operation.

Scroll down and click the Add button.

7. Scroll down to the Basic settings tab, select Edit → IAM role, and attach the policy given below.

This policy allows the Lambda function to create a lifecycle configuration on all the buckets in the AWS account.

{ "Version": "2012-10-17", "Statement": [ { "Sid": "VisualEditor0", "Effect": "Allow", "Action": "s3:PutLifecycleConfiguration", "Resource": "*" } ] }

8. Create a bucket to check that the Lambda function is working correctly.

9. That's it! Now every time you create a new bucket (in the region you configured), the Lambda function will automatically create a lifecycle rule for that bucket.
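For reference, the choices in step 6 correspond to an EventBridge event pattern along these lines. This sketch assumes the standard field names for CloudTrail events delivered through EventBridge (`source`, `detail-type`, `detail.eventSource`, `detail.eventName`); the console-generated pattern may differ slightly. The JSON could also be passed to `aws events put-rule --event-pattern`. The `matches` function is a hypothetical, simplified matcher covering only exact string values, not EventBridge's prefix, numeric, or anything-but operators.

```python
import json

# Event pattern -> Simple Storage Service (S3) -> AWS API Call via CloudTrail -> CreateBucket
CREATE_BUCKET_PATTERN = {
    "source": ["aws.s3"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["s3.amazonaws.com"],
        "eventName": ["CreateBucket"],
    },
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified EventBridge matching: every pattern field must exist and equal a listed value."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        if isinstance(expected, dict):
            # Nested pattern objects match nested event objects.
            if not isinstance(event[key], dict) or not matches(expected, event[key]):
                return False
        elif event[key] not in expected:
            return False
    return True


if __name__ == "__main__":
    print(json.dumps(CREATE_BUCKET_PATTERN))
```

Fields that the pattern does not mention, such as `requestParameters`, are ignored when matching, which is why the full CloudTrail record still reaches the Lambda function.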

Thanks for reading! To stay connected, follow us on the DoiT Engineering Blog, DoiT LinkedIn Channel, and DoiT Twitter Channel. To explore career opportunities, visit https://careers.doit-intl.com.

Source: https://www.doit-intl.com/aws-s3-multipart-uploads-avoiding-hidden-costs-from-unfinished-uploads/
